Assessment Optimizer Agent

Purpose: The Assessment Optimizer Agent aims to make the evaluation process more rigorous, fair, and scalable. It helps faculty design robust assessments and automate parts of grading, so that exams and assignments measure the intended learning while preserving academic integrity.

Core Capabilities

Question Bank Generation

Builds diverse question pools aligned with learning outcomes and cognitive levels (e.g., those of Bloom’s Taxonomy). For a given topic, it can generate multiple-choice, short-answer, and problem-solving questions at varying difficulty levels, so that an assessment covers both basic understanding and higher-order thinking skills.
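
A question bank like this is easiest to reason about as tagged items plus a test blueprint. The sketch below assumes that each generated question carries a learning-outcome label, a level from the revised Bloom’s Taxonomy, and a difficulty rating. The `Question` fields, the 1 to 3 difficulty scale, `assemble_quiz`, and `difficulty_profile` are hypothetical names for illustration, not part of any particular LMS or of the agent itself.

```python
import random
from collections import Counter
from dataclasses import dataclass
from enum import IntEnum


class Bloom(IntEnum):
    """Cognitive levels of the revised Bloom's Taxonomy, lowest to highest."""
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


@dataclass(frozen=True)
class Question:
    qid: str
    outcome: str     # learning outcome the item assesses (hypothetical label)
    kind: str        # "mcq", "short_answer", or "problem"
    bloom: Bloom
    difficulty: int  # 1 (easy) to 3 (hard); hypothetical scale


def assemble_quiz(bank, blueprint, seed=0):
    """Pick questions so that each (outcome, Bloom level) cell of the blueprint
    receives the requested number of items.

    blueprint maps (outcome, Bloom) -> count. Raises ValueError when the bank
    cannot fill a cell, which marks a gap for the generator to fill.
    """
    rng = random.Random(seed)  # seeded so a draw can be reproduced for review
    quiz = []
    for (outcome, level), count in blueprint.items():
        pool = [q for q in bank if q.outcome == outcome and q.bloom == level]
        if len(pool) < count:
            raise ValueError(
                f"bank has {len(pool)} items for {outcome}/{level.name}, need {count}"
            )
        quiz.extend(rng.sample(pool, count))
    return quiz


def difficulty_profile(quiz):
    """Count items per difficulty level so instructors can check the spread."""
    return Counter(q.difficulty for q in quiz)
```

Raising an error for an unfillable cell, rather than silently returning a shorter quiz, makes coverage gaps visible at exactly the point where new questions would need to be generated.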

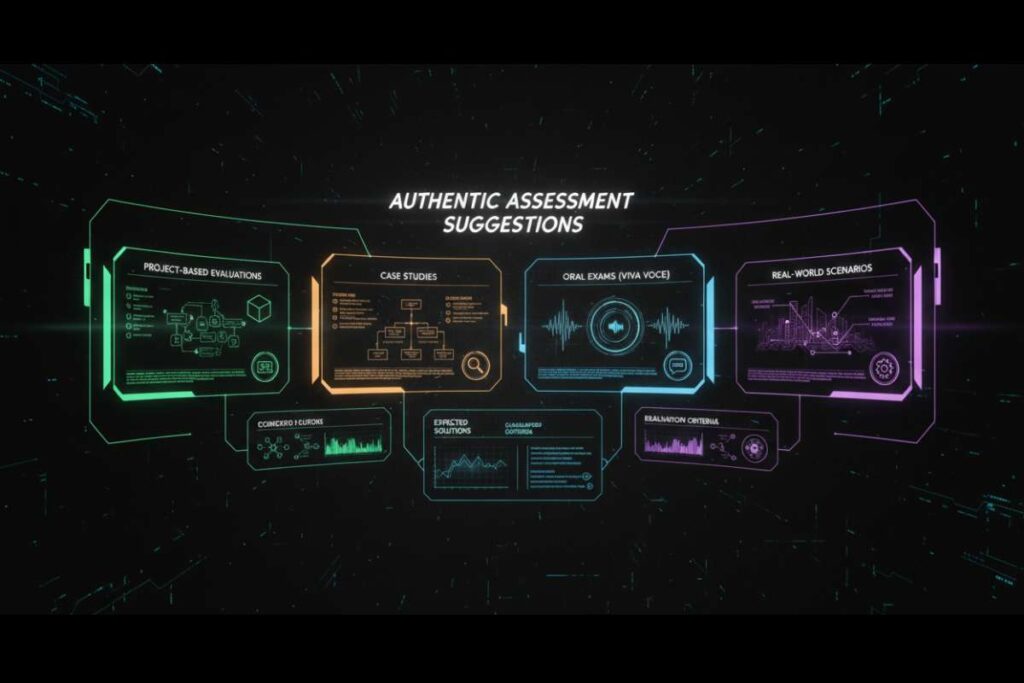

Authentic Assessment Suggestions

Proposes authentic assessments, such as projects, case studies, or oral exams (viva voce), that reduce opportunities for cheating. It provides prompts for project-based evaluations and designs scenarios in which students must apply concepts to realistic situations. Each suggestion includes notes on expected solutions and how to evaluate them.

Rubric Enhancement

Assists in creating detailed grading rubrics. For each assignment or question type, the agent can draft rubric criteria and performance-level descriptions aligned with the intended learning outcomes. It also offers pre-written, rubric-based feedback statements that instructors can use when grading (e.g., feedback for common errors or for exemplary answers).
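
One way to represent such a rubric is as a list of criteria, each with labelled performance levels that carry points, a descriptor, and a reusable feedback statement. The sketch below is a minimal illustration under that assumption; `Level`, `Criterion`, `score`, and the point values are hypothetical. The instructor still chooses the level for each criterion, and the function only totals points and gathers the matching feedback.

```python
from dataclasses import dataclass, field


@dataclass
class Level:
    points: int
    descriptor: str  # what performance at this level looks like
    feedback: str    # pre-written comment an instructor may reuse or edit


@dataclass
class Criterion:
    name: str
    outcome: str  # learning outcome the criterion provides evidence for
    levels: dict = field(default_factory=dict)  # level label -> Level


def score(rubric, selections):
    """Total the points for the levels an instructor selected and collect the
    matching feedback statements.

    selections maps criterion name -> level label. Returns
    (total, maximum possible total, list of feedback lines).
    """
    total, comments = 0, []
    for crit in rubric:
        level = crit.levels[selections[crit.name]]
        total += level.points
        comments.append(f"{crit.name}: {level.feedback}")
    max_total = sum(max(lvl.points for lvl in c.levels.values()) for c in rubric)
    return total, max_total, comments
```

Keeping the feedback text on each level, rather than in a separate table, means a drafted rubric and its feedback bank are reviewed and versioned together.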

Anomaly Detection

Analyzes grading patterns and outcomes to highlight inconsistencies or anomalies. For instance, it can flag a question whose scores are much lower than those on other questions (which may indicate a problem with the question itself), or grading discrepancies between different graders or sections. This helps maintain fairness and consistency in evaluation.
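
Both checks can be expressed as simple statistics over exported scores. The sketch below assumes scores are already normalised to a 0 to 1 fraction of each item's maximum. It uses a modified z-score (median and median absolute deviation, with the commonly used 3.5 cut-off) to find items with unusually low means, and a plain difference of grader means to find discrepancies. The function names and the 0.15 tolerance are hypothetical choices, not the agent's documented method.

```python
from statistics import mean, median


def flag_low_items(item_scores, threshold=3.5):
    """Return questions whose mean score is unusually low relative to the
    other questions.

    item_scores maps question id -> list of per-student scores in [0, 1].
    Uses the modified z-score 0.6745 * (x - median) / MAD, which is robust:
    one very poor item cannot inflate the spread enough to hide itself.
    """
    means = {q: mean(s) for q, s in item_scores.items()}
    med = median(means.values())
    mad = median(abs(m - med) for m in means.values())
    if mad == 0:
        return []  # item means are too uniform for this test to say anything
    return [q for q, m in means.items() if 0.6745 * (m - med) / mad < -threshold]


def flag_grader_gaps(scores_by_grader, max_gap=0.15):
    """Return pairs of graders (who marked the same question) whose mean
    awarded fraction differs by more than max_gap."""
    means = {g: mean(s) for g, s in scores_by_grader.items()}
    graders = sorted(means)
    return [
        (a, b)
        for i, a in enumerate(graders)
        for b in graders[i + 1:]
        if abs(means[a] - means[b]) > max_gap
    ]
```

A gap between graders' means can also reflect genuine differences between the sections they marked, so these flags are prompts for human review rather than verdicts.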

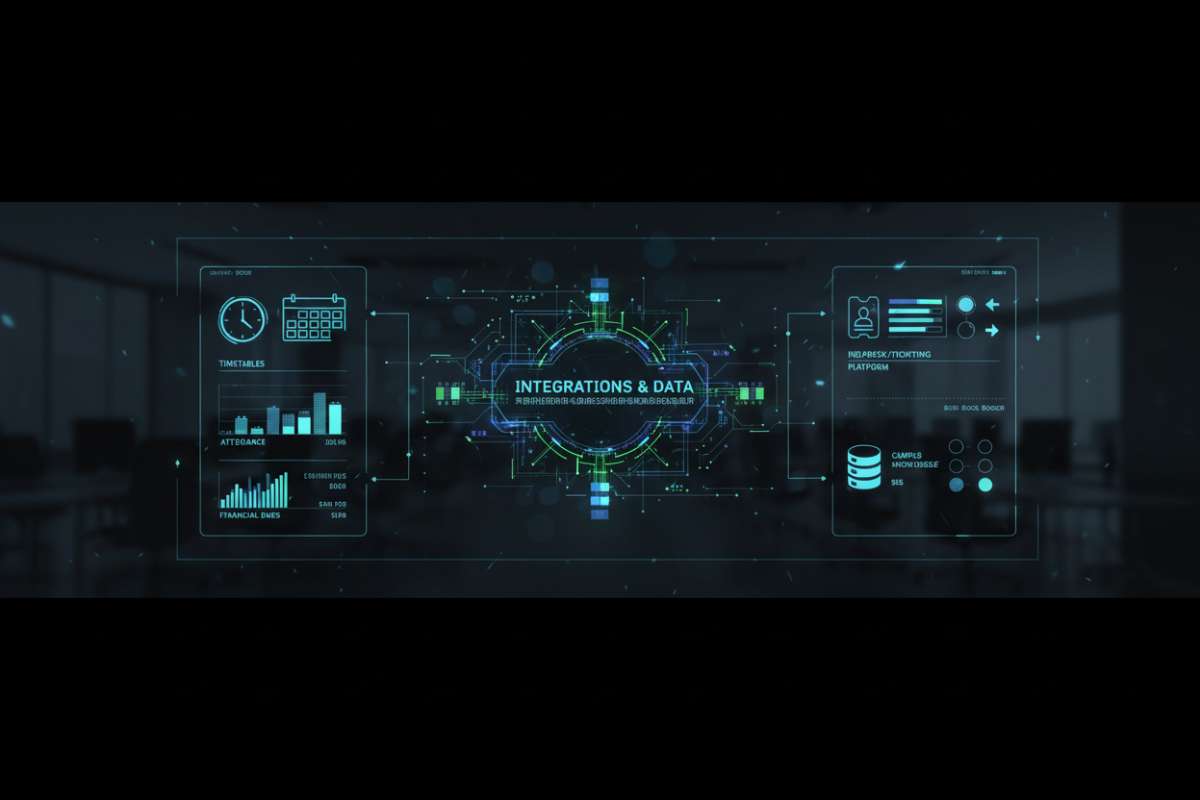

Integrations & Data

Pulls data on past assessments and student performance from the LMS to inform its suggestions (e.g., which questions were used before and how students scored on them). Integrates with plagiarism detection tools to check that new questions or project ideas are original and not easily answered with an internet search. Can push generated question banks into the LMS quiz engine or export them in suitable formats.
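
As an example of export, the sketch below renders questions in Moodle’s GIFT text format, assuming the target LMS accepts GIFT imports (other platforms may expect QTI or CSV instead). It covers only three GIFT question types: multiple choice (`{=right ~wrong ...}`), short answer (`{=accepted ...}`), and essay (`{}`). The dictionary keys and the function names `gift_escape` and `to_gift` are hypothetical.

```python
# Characters that GIFT treats as markup and that must be backslash-escaped.
GIFT_SPECIAL = "~=#{}:"


def gift_escape(text):
    """Backslash-escape GIFT markup characters in question or answer text."""
    return "".join("\\" + ch if ch in GIFT_SPECIAL else ch for ch in text)


def to_gift(questions):
    """Render a list of question dicts as a GIFT document.

    Each dict has 'title', 'text', and 'kind', plus:
      - 'mcq': 'answer' (the correct option) and 'distractors' (list)
      - 'short_answer': 'accepted' (list of acceptable answers)
      - 'essay': nothing extra
    """
    blocks = []
    for q in questions:
        head = f"::{gift_escape(q['title'])}::{gift_escape(q['text'])}"
        if q["kind"] == "mcq":
            options = [f"={gift_escape(q['answer'])}"]
            options += [f"~{gift_escape(d)}" for d in q["distractors"]]
            body = "{" + " ".join(options) + "}"
        elif q["kind"] == "short_answer":
            body = "{" + " ".join(f"={gift_escape(a)}" for a in q["accepted"]) + "}"
        elif q["kind"] == "essay":
            body = "{}"
        else:
            raise ValueError(f"unsupported question kind: {q['kind']}")
        blocks.append(f"{head} {body}")
    # GIFT separates questions with a blank line.
    return "\n\n".join(blocks) + "\n"
```

Generating a plain-text interchange file keeps the export step reviewable: faculty can read or edit the file before it is imported into the quiz engine.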

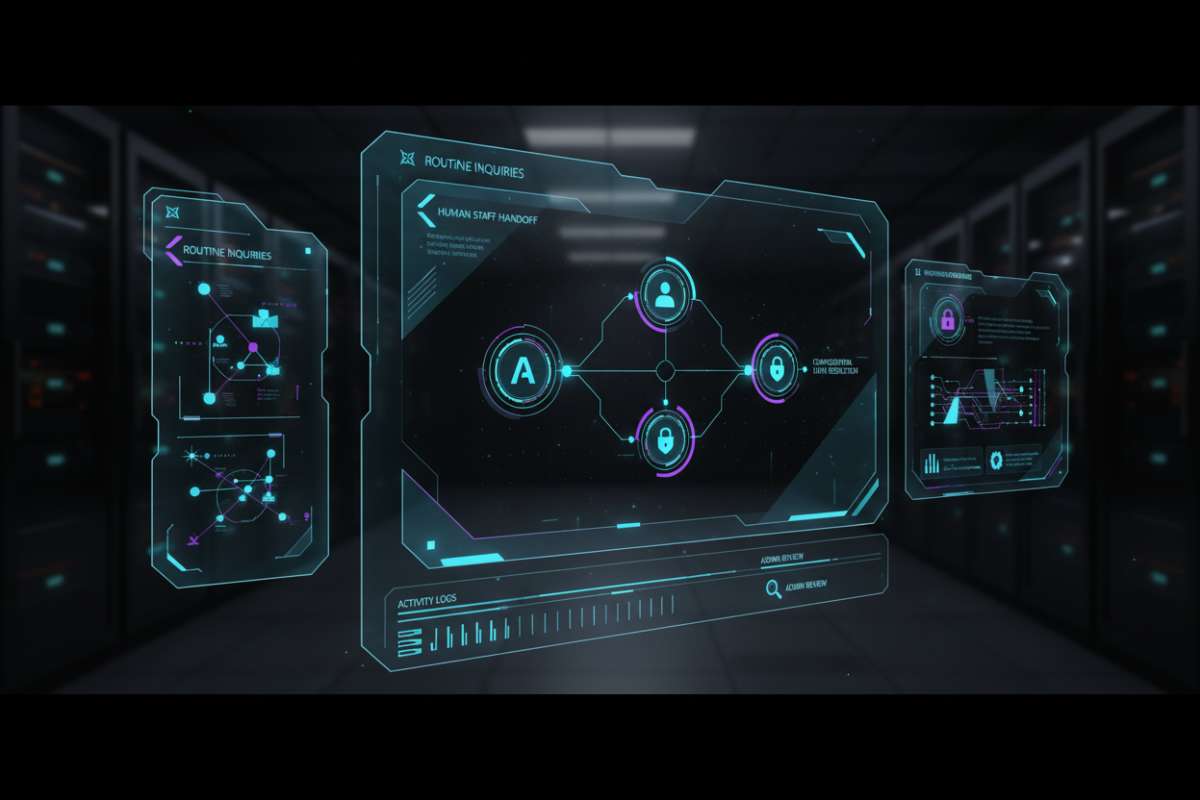

Guardrails

The agent does not make final grading decisions. It may suggest grades or flag anomalies, but instructors have the final say and must review any AI-generated grading suggestions. Faculty review all new questions and tasks to ensure they fit the course context. The agent logs all content it generates for audit, which helps verify that solutions are not leaked or improperly reused. To protect integrity, the agent is also barred from accessing live exam data while a test is in progress, and it operates in read-only mode for grading, so it cannot alter student submissions or grades without instructor approval.
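
These guardrails amount to an access policy. The sketch below models it as a hypothetical `AgentGuard` wrapper: the agent can read grade snapshots only outside an exam window, can queue but not apply grade suggestions, and records a fingerprint of each generated artifact, while only the instructor-facing `approve` method writes grades. All names are illustrative, and logging a SHA-256 digest rather than the full text is an assumption about how the audit trail might be stored.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Suggestion:
    student: str
    item: str
    score: float
    approved_by: Optional[str] = None  # set only when an instructor signs off


@dataclass
class AgentGuard:
    """Hypothetical policy layer between the agent and the LMS gradebook."""
    exam_windows: dict  # exam_id -> (start, end) as timezone-aware datetimes
    gradebook: dict = field(default_factory=dict)  # (student, item) -> score
    pending: list = field(default_factory=list)    # Suggestions awaiting review
    audit_log: list = field(default_factory=list)  # (UTC timestamp, action, detail)

    def _log(self, action, detail):
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), action, detail))

    # Agent-facing methods: read, suggest, and record only.

    def read_grades(self, exam_id, now):
        """Return a snapshot of grades, refusing while the exam is in progress."""
        start, end = self.exam_windows[exam_id]
        if start <= now < end:
            self._log("denied_read", exam_id)
            raise PermissionError(f"{exam_id} is in progress; live data is blocked")
        self._log("read", exam_id)
        return dict(self.gradebook)  # a copy, so edits never reach the gradebook

    def suggest_grade(self, student, item, score):
        """Queue a suggested grade; nothing is written to the gradebook."""
        self.pending.append(Suggestion(student, item, score))
        self._log("suggested", (student, item, score))
        return len(self.pending) - 1

    def record_generated(self, content):
        """Log a SHA-256 fingerprint of generated content (e.g., a model
        solution) so an exact leaked copy can later be matched to the log."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        self._log("generated", digest)
        return digest

    # Instructor-facing method: the only path that changes grades.

    def approve(self, index, instructor, final_score=None):
        """Apply a pending suggestion, optionally overriding its score."""
        s = self.pending[index]
        s.approved_by = instructor
        score = s.score if final_score is None else final_score
        self.gradebook[(s.student, s.item)] = score
        self._log("approved", (instructor, s.student, s.item, score))
        return score
```

In a real deployment these restrictions would be enforced by LMS permissions (for example, a read-only service account for the agent) rather than by which Python method is called; the class only shows where each check sits.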

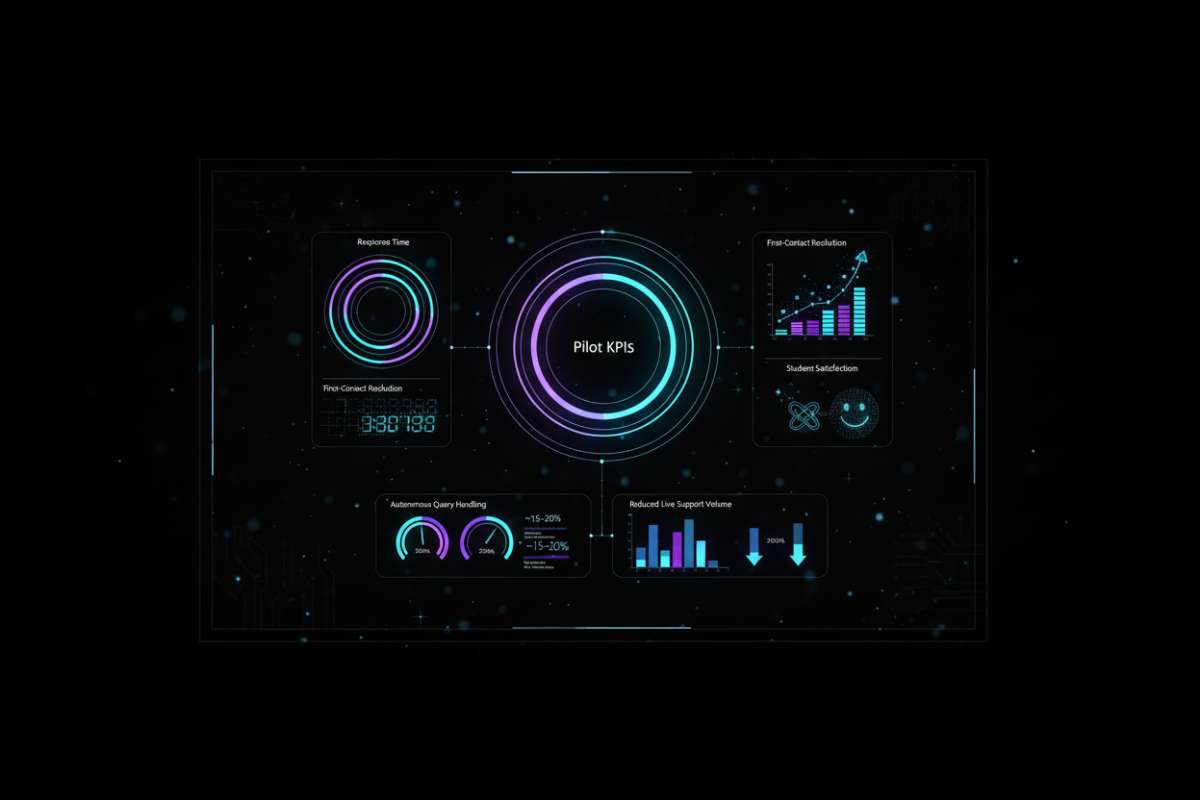

Pilot KPIs

In pilot use, post-exam rework and corrections dropped significantly (e.g., fewer questions had to be discarded or regraded), which was attributed to better upfront design. Faculty time spent creating exam papers and rubrics fell by an estimated 30%. Rubric adoption increased, leading to more consistent grading across classes. Academic integrity incidents (such as cheating or unfair accusations) also declined as assessments became more cheat-resistant and transparent.